How Google’s Veo 3 AI Video Generator is Changing Content Creation

+ Midjourney Unveils New V1 Video Model for AI-Driven Video Generation

The Gen Creative

Today’s Creative Spark…

How Google’s Veo 3 AI Video Generator is Changing Content Creation

Midjourney Unveils New V1 Video Model for AI-Driven Video Generation

Adobe Firefly just launched on mobile — here’s what creators can do with it

Why Your AI Images Come with Errors—And How to Improve Them

How AI is redefining interior design

AI is turning complex creative workflows into effortless magic, making professional-grade video and design accessible to everyone—anytime, anywhere

Read time: 6 minutes

Video Generation

Source: Geeky Gadgets

Summary: Google's Veo 3 AI Video Generator is changing the content creation landscape by converting simple text scripts or images into professional-grade videos in minutes, no editing expertise required! This powerful platform automates complex editing tasks like scene transitions and color correction while offering template libraries, custom branding, AI voiceovers, and multi-platform optimization. From marketing campaigns and educational tutorials to social media content and corporate training, Veo 3's streamlined six-step process makes high-quality video production accessible to creators of all skill levels across diverse industries.

Five Essential Elements:

Automated Production Pipeline: Six-step process from login to export handles complex editing tasks like scene transitions, color correction, and audio synchronization automatically for professional results.

Comprehensive Template Library: Pre-designed templates tailored for various industries and content types, enabling quick customization with brand colors, logos, and watermarks for consistent identity.

AI-Powered Content Conversion: Text-to-video technology transforms written scripts into visually engaging narratives, while voiceover integration adds AI-generated or custom audio narration.

Multi-Platform Optimization: Export capabilities specifically formatted for YouTube, Instagram, TikTok, and other platforms, ensuring content performs optimally across different social media environments.

Versatile Industry Applications: Supports marketing campaigns, educational content, social media posts, corporate training, and event highlights, demonstrating broad utility across professional and creative sectors.

Published: June 16, 2025

Video Generation

Source: Midjourney

Summary: Midjourney showcases its groundbreaking V1 Video Model with image-to-video animation that brings static creations to life. This strategic stepping stone toward real-time open-world simulations offers automatic and manual animation modes with high/low motion settings, plus the ability to extend videos up to 20 seconds total. At just "one image worth of cost" per second and 25x cheaper than market competitors, users can animate both Midjourney-generated images and external uploads. This makes professional video creation accessible to everyone while building toward an ambitious future of interactive 3D environments.

Five Essential Elements:

Excellent Pricing Model: A video job costs about 8x an image job, which puts each video at roughly the price of one upscale, or "one image worth of cost" per second; Midjourney describes this as 25x cheaper than existing market solutions.

Flexible Animation Options: Automatic mode generates motion prompts independently, while manual mode allows custom movement descriptions, with high/low motion settings for different scene requirements.

Extensible Video Creation: Initial 5-second videos can be extended roughly 4 seconds at a time up to four extensions total, creating up to 20-second animated sequences.

Universal Image Compatibility: Animate both native Midjourney images and external uploads by dragging an image to the prompt bar, marking it as the "start frame," and adding a custom motion description.

Strategic Technology Vision: V1 Video serves as a building block toward the ultimate goal of real-time open-world simulations combining visuals, movement, 3D space navigation, and interactive environments.

Published: June 18, 2025
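
The extension limits above come down to simple arithmetic. Here is a rough sketch in Python, assuming every extension adds exactly 4 seconds (the summary only says "roughly 4"). The constant and function names are ours for illustration and are not part of any Midjourney tool or API.

```python
# Illustrative arithmetic only; names and the exact 4-second step are assumptions.
INITIAL_SECONDS = 5      # length of the first generated clip
EXTENSION_SECONDS = 4    # "roughly 4 seconds" per extension, rounded here
MAX_EXTENSIONS = 4       # up to four extensions in total


def clip_length(extensions: int) -> int:
    """Return the nominal clip length in seconds after a number of extensions."""
    if not 0 <= extensions <= MAX_EXTENSIONS:
        raise ValueError(f"extensions must be between 0 and {MAX_EXTENSIONS}")
    return INITIAL_SECONDS + extensions * EXTENSION_SECONDS


if __name__ == "__main__":
    for n in range(MAX_EXTENSIONS + 1):
        print(f"{n} extension(s): about {clip_length(n)} seconds")
```

With these round numbers the longest clip comes to 21 seconds, which lines up with the roughly 20 seconds quoted above once the "roughly" is taken into account.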

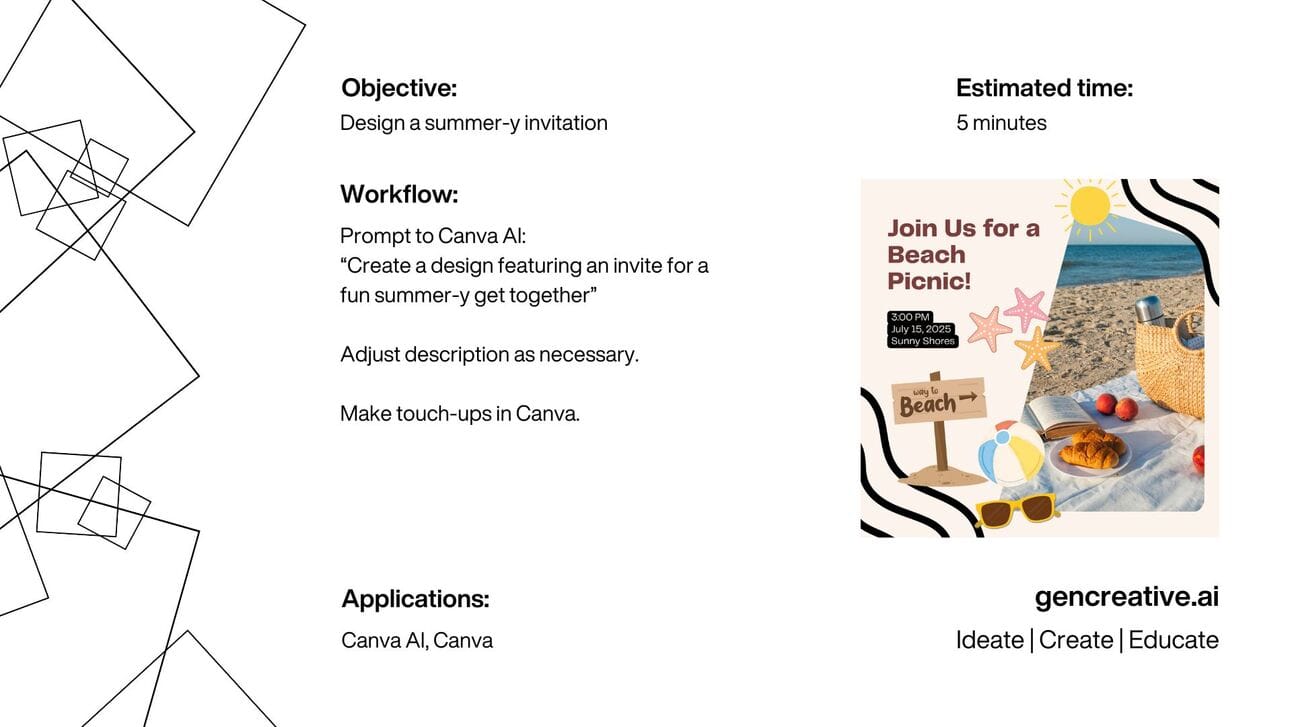

Workflow by The Gen Creative

In each newsletter, the Gen Creative team puts together a practical creative workflow so you can get ideas of how to implement AI right away. Want to see more? Check them out here!

Image and Video Generation

Source: Tom’s Guide

Summary: Adobe Firefly breaks new ground with its first dedicated mobile app, bringing powerful AI image and video generation directly to iOS and Android devices! This game-changing platform combines text-to-image, text-to-video, and image-to-video tools with seamless Creative Cloud sync across Photoshop and Premiere Pro. What sets it apart is multi-model integration featuring Google Imagen, OpenAI, Luma AI, Runway, and others in one interface, plus commercially safe content trained on licensed materials—making professional-grade AI creation truly portable for the first time.

Five Essential Elements:

Mobile-First AI Creation: First major platform bringing text-to-video generation to smartphones, enabling creation of high-quality images and 5-second 1080p video clips directly on iOS and Android devices.

Multi-Model AI Hub: Integrates Adobe's own models with Google Imagen, OpenAI, Luma AI, Runway, Pika, Ideogram, and Flux engines in one unified interface for diverse creative experimentation.

Commercial Safety Focus: Models trained exclusively on licensed or public-domain material, eliminating copyright concerns and enabling worry-free commercial use for professional creators.

Seamless Workflow Integration: Automatic Creative Cloud syncing ensures projects flow effortlessly between mobile Firefly, desktop Photoshop, Premiere Pro, and web platforms without workflow disruption.

Advanced Video Controls: Text-to-video tools include motion, angle, and zoom controls plus Generative Fill and Expand features, creating social-ready content without requiring traditional editing suites.

Published: June 17, 2025

Image Generation

Source: UNITE.AI

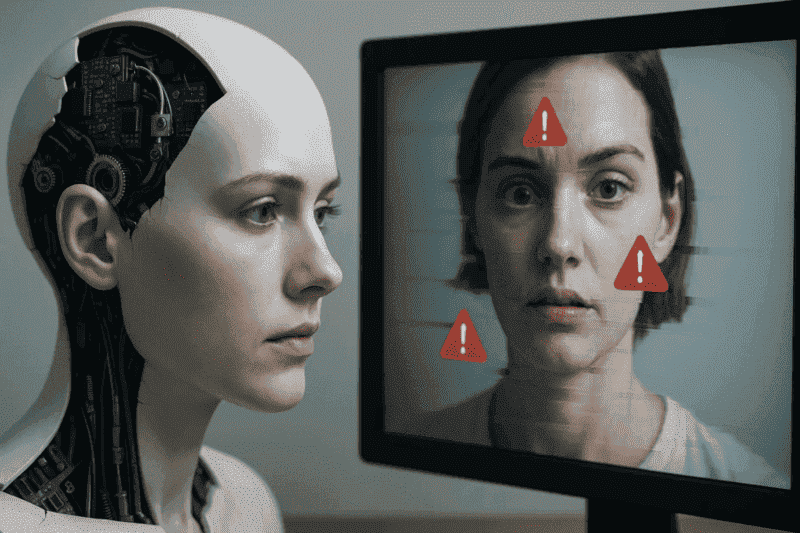

Summary: AI image generation is becoming incredibly powerful, and understanding how to work with its quirks unlocks amazing creative possibilities for both beginners and seasoned artists! While challenges like prompt complexity, anatomical accuracy, and identity consistency exist, the AI community has developed brilliant solutions that turn these limitations into opportunities for creative mastery. With the right techniques and tools, creators can achieve stunning professional-quality results that perfectly match their artistic vision—transforming AI from a random generator into a precise creative partner.

Five Essential Elements:

Master Prompt Engineering: Use visual prompt builders, community-tested prompt libraries, and standardized syntax guides to craft effective prompts, while newer models like FLUX make the process more intuitive and forgiving.

Perfect Anatomical Accuracy: Deploy LoRAs trained on anatomical datasets, ControlNets with pose estimation, and anatomy-aware correction tools to eliminate issues like extra fingers and create realistic human proportions.

Achieve Identity Consistency: Leverage reference image conditioning, embedding techniques (PuLID, IPAdapter, InstantID), and face swap models to maintain perfect character continuity across multiple generations for storytelling projects.

Create Coherent Backgrounds: Apply depth mapping, perspective guides, relighting LoRAs, and specialized environment datasets to generate realistic spatial relationships with proper lighting and shadow integration.

Gain Precise Creative Control: Utilize ControlNets, custom style models, masking tools, and post-processing enhancers to direct AI output exactly where you want it, transforming probabilistic generation into intentional artistry.

Published: June 17, 2025

Interior Design

Source: NNS MAGAZINE

Summary: AI is fundamentally reshaping interior design with explosive market growth from $1.09 billion in 2024 to a projected $15.06 billion by 2034, driven by 65% professional adoption and tools like Midjourney, DALL·E, and Interior AI! The technology excels in visual support through generated moodboards, spatial optimization via layout algorithms, personalization of style and mood, and rapid production of renders and prototypes. However, AI serves as enhancement rather than replacement—designers become strategic curators selecting from algorithmic alternatives while maintaining human sensitivity for ergonomics, technical constraints, and authentic taste that understands real-world needs.

Five Essential Elements:

Explosive Market Growth: AI interior design market surging from $1.09 billion in 2024 to projected $15.06 billion by 2034, with 65% of design professionals already integrating AI tools into workflows.

Four Core Applications: AI excels in visual support (generated moodboards), spatial optimization (layout algorithms), personalization (style and mood matching), and rapid production (rendering and prototypes).

Advanced Tool Integration: Platforms like Midjourney, Interior AI, and Canoa Supply transform text prompts into hyper-realistic renders and reconfigure real photographs into new spatial designs.

Human-AI Collaboration: Designers evolve into strategic curators selecting optimal algorithmic alternatives while preserving essential human judgment for ergonomics, technical constraints, and authentic aesthetic taste.

Hybrid Future Vision: All design software is expected to integrate AI capabilities by 2026, creating spaces born from algorithms while maintaining irreplaceable human sensitivity for real-world functionality and emotional resonance.

Published: June 18, 2025
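
To put the market projection in perspective, the two figures above imply a compound annual growth rate. The sketch below assumes smooth year-over-year compounding across the ten years from 2024 to 2034. The source article gives only the start and end values, so the rate is our own back-of-the-envelope calculation, and the function name is ours.

```python
# Back-of-the-envelope calculation; the smooth-compounding assumption is ours.
def compound_annual_growth_rate(start: float, end: float, years: int) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1 / years) - 1


START_BILLIONS = 1.09   # market size in 2024, from the summary above
END_BILLIONS = 15.06    # projected market size in 2034, from the summary above
rate = compound_annual_growth_rate(START_BILLIONS, END_BILLIONS, 2034 - 2024)

if __name__ == "__main__":
    print(f"Implied growth: about {rate:.0%} per year")
```

That works out to growth of about 30% a year, every year, for a decade.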

Remote Creative Jobs

5 Remote Startup Creative Jobs

Interior Designer: Hiring a Remote Interior Designer with 5–10 years’ experience, strong FF&E and CAD skills, and fluency in Portuguese and English to lead high-end residential and hospitality projects.

Videographer: Common Ground USA seeks a Videographer to lead a 2025 national video campaign promoting peace and collaboration, delivering three platform-optimized videos over 45 days across 3 months, with a focus on storytelling, licensing, and production coordination in Texas and Pennsylvania.

Senior Photography Editor: Wallpaper is hiring a Photo Editor (12-month FTC, £33–35k, hybrid in London) to commission and brief photographers across design, fashion, travel, art, and more—supporting editorial production with momentum, creative relationships, and strong image rights knowledge.

Lead Game Producer: Magic Media is hiring a Lead Game Producer to manage multiple cross-functional teams and game projects from concept to launch. You’ll drive Agile production, optimize pipelines, and ensure delivery across platforms.

Creative Producer: Hypebeast is hiring a Creative Producer (NY) to lead campaign shoots, timelines, and budgets. 2+ years’ experience and strong cultural fluency required.

See you next time!

AI is becoming a steady presence in creative work. 🧑‍💻🖍️ From visual design and sound editing to story development, it’s offering everyday support as ideas come together. 🖼️🎙️ Whether arranging images, adjusting audio, or refining text, AI tools are helping smooth out the process. 📸🎧 Take a closer look at how technology is becoming part of creative routines. 🔧🪄

How did you like it?

We'd love to hear your thoughts on today’s Creative Spark! ✨ Your feedback helps us improve and tailor future newsletters to your interests. 📝 Please take a moment to share your thoughts and let us know what you enjoyed or what we can do better. 💬 Thank you for being a valued reader! 🌟

Keep Reading

These 10 hidden Photoshop techniques will supercharge your design workflow and reclaim hours of creative time! AI-powered Generative Fill seamlessly extends backgrounds and removes objects while Camera Raw Filter provides non-destructive advanced editing. Smart Objects preserve original quality through unlimited scaling, spring-loaded tools enable lightning-fast switching by holding shortcut keys, and Bird's Eye View navigation makes massive canvas work effortless. Master Quick Mask Mode for intuitive selection painting, use number keys for instant brush opacity control, and leverage Actions to automate repetitive sequences. Color-coded layer management with filtering options streamlines complex projects, while the Frame Tool makes mockup creation a breeze, collectively reclaiming hours of your work week for pure creative focus.

MIT's AI restoration breakthrough delivers 66x faster results than traditional methods, completing months-long projects in single-day timeframes through automated analysis and reconstruction. The system uses ultra-precise color matching algorithms that map thousands of unique hues with artist-level accuracy, learning original creators' intent to fill gaps with uncanny detail precision. Revolutionary razor-thin polymer film masks apply digitally reconstructed elements without touching original canvases, remaining completely removable to preserve artwork authenticity and soul. High-resolution scanning provides comprehensive damage analysis, identifying different deterioration types to create systematic layered restoration plans addressing cracks and losses. This human-AI collaboration maintains professional oversight with conservators guiding application while AI handles complex analysis, transforming centuries-old masterpiece restoration from painstaking craft into precise, reversible digital innovation.

Generative AI is supercharging design thinking by accelerating ideation, prototyping, and expanding creative possibilities while keeping human needs at the center! This powerful technology serves as a catalyst rather than replacement, processing qualitative feedback during empathy phases, proposing unexpected variations in brainstorming, and generating interface mockups for rapid prototyping. Companies like Spotify, healthcare organizations, and financial services are already leveraging AI to analyze user feedback, simulate patient journeys, and craft personalized digital experiences—proving that AI has evolved from novelty to strategic partner in user-centric innovation.